HPE Goes After Enterprise AI With Nvidia GPU Engines

This week brings another major conference for a server OEM, and that must mean it is time for another OEM to announce its tight partnership with AI compute engine juggernaut Nvidia.

This week, it is Hewlett Packard Enterprise, which is trying to play both sides of the GPU market, focusing on AMD engines for at least some of its largest machines (often hybrid HPC/AI systems at the national labs in the United States and Europe) and Nvidia engines for its burgeoning AI aspirations – and backlog – among enterprise customers.

During Nvidia’s GTC 2024 developer conference in March, founder and CEO Jensen Huang stressed that his company was not simply a GPU maker or a cloud company but a “platform company.” Nvidia has an expansive mix of hardware, from much-in-demand GPUs to supercomputers to its NVLink interconnect, software tools like CUDA and AI Enterprise, and packages for specific industries like healthcare, automotive, and financial services, all wrapped by its decade-long focus on AI.

The explosive growth of generative AI innovation and adoption since late 2022 and the accompanying sharp shift in that direction by almost every IT vendor has made Nvidia the go-to AI partner for hardware and software companies alike. At GTC, Nvidia announced expanded partnerships with companies like Google and SAP, and at the Dell Technologies World conference last month, Dell expanded its Dell AI Factory with Nvidia – also unveiled at GTC – to include new hardware.

Earlier this month, Cisco Systems introduced its Nexus HyperFabric AI cluster, a combination of its own AI networking and Nvidia’s accelerated computing and AI Enterprise software platform – along with storage from VAST Data – aimed at letting enterprises more easily run AI workloads.

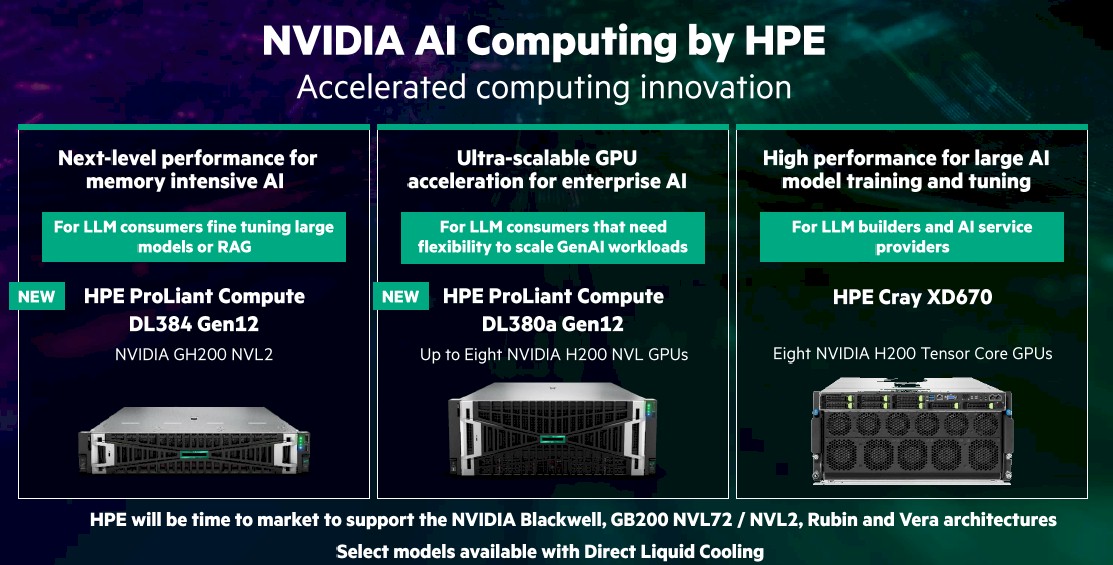

Now it is HPE’s turn. At its Discover 2024 show this week in Las Vegas, HPE and Nvidia announced Nvidia AI Computing by HPE, a collection of jointly developed AI offerings and go-to-market strategies aimed at driving AI computing by enterprises. At its foundation are three HPE servers, including the ProLiant DL380a Gen12 powered by eight of Nvidia’s H200 NVL Tensor Core GPUs and the DL384 Gen12 with GH200 NVL2 accelerators.

The Cray XD670 holds eight H200 NVL Tensor Core GPUs and is aimed at enterprises building LLMs. HPE also will adopt upcoming Nvidia chips when they come to market, including its GB200 NVL72 and NVL2 GPUs and its Blackwell, Rubin, and Vera accelerators.

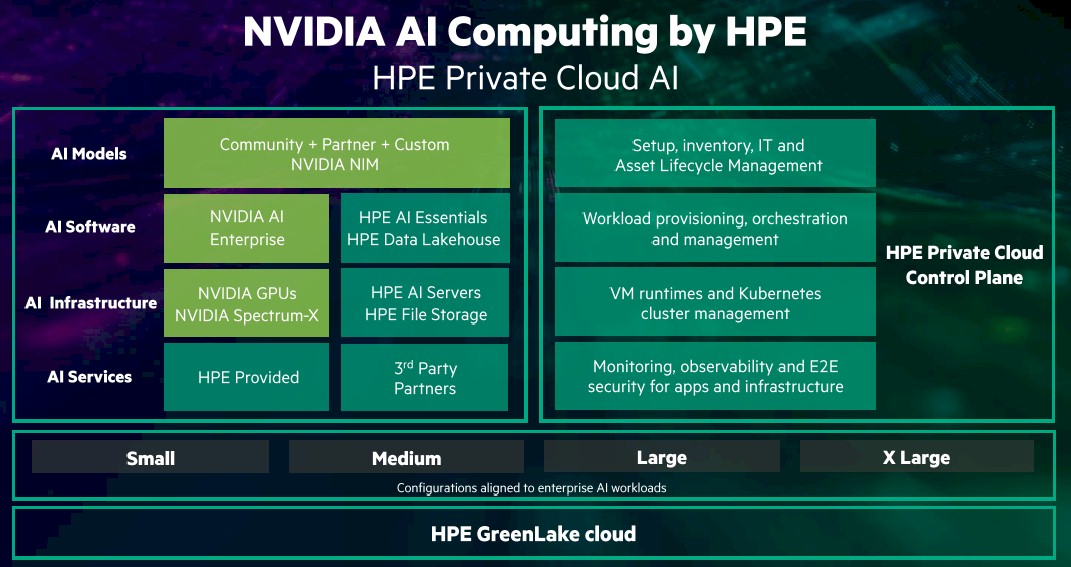

Alongside this is the HPE Private Cloud AI, which includes Nvidia’s AI Enterprise software platform and Nvidia Inference Microservices (NIMs). Announced at GTC, NIMs are essentially virtualized containers that include everything enterprises need to build and run their inferencing workloads, including APIs, inference engines, and optimized AI models. HPE also brings its AI Essentials software and an infrastructure that includes Nvidia’s Spectrum-X Ethernet networking, HPE’s GreenLake for File Storage service, and the ProLiant servers.

Enterprises will have four configuration sizes – small, medium, large, and extra-large – to choose from, each with a corresponding number of GPUs, storage space, networking capabilities, and power consumption. Enterprises can pick their configuration based on their needs, from the smallest size, suited to inferencing workloads, to the largest, for inferencing, retrieval-augmented generation (RAG), and fine-tuning tasks.

Organizations will be able to spin up the HPE Private Cloud AI in three clicks, Fidelma Russo, executive vice president and general manager of hybrid cloud and CTO for HPE, said during a briefing before the show kicked off.

“Each configuration is modular and it allows you to expand or add capacity over time and maintain a consistent, cloud-managed experience with the HPE GreenLake cloud,” Russo said. “You can start with a few small model inferencing pods and you can scale to multiple use cases with higher throughputs, and you can have RAG or LLM fine tuning in one solution. We offer a choice of how you can consume it and a range of support, from self-managed to delivering it as a fully managed service.”

Neil MacDonald, HPE’s executive vice president and general manager of compute, HPC, and AI, added that as the HPE Private Cloud AI matures, the vendor will bring its liquid cooling into the mix.

“Direct liquid cooling is incredibly relevant for the future of AI platforms in the enterprise,” MacDonald said. “With a portfolio of over 300 patents, we span from liquid to air cooling, using chilled water supply from a facility to cool down the air-cooling systems adjacent to air cooled servers. We build systems with 70 percent direct liquid cooling that combine the direct liquid cooling and air cooling. And we have 100 percent direct liquid cooled systems, in which the coolant flows through a network of tubes and cold plates to extract heat directly from all components on the server.”

The joint HPE-Nvidia offerings are examples of what Jensen said are the ways AI is changing how things look and operate in the datacenter and the cloud.

“This is the greatest fundamental computing platform transformation in 60 years, from general-purpose computing to accelerated computing, from processing on CPUs to processing on CPUs plus GPUs, from instructions, engineered instructions to now large language models that are trained on data from instruction driven computing to now intention driven computing, every single layer of the computing stack has been transformed, as we exactly know very deeply well, and the type of applications that are now possible to write and develop are completely new,” Jensen said while standing next to HPE president and chief executive officer Antonio Neri on the keynote stage. “The way you develop the application is completely new, and so every single layer of the computing stack is going through a transition.”

This rapid shift to AI has been very good for Nvidia, which saw its first-quarter revenue jump 262 percent year-over-year to $26 billion and its market value reach $3 trillion. Demand for its H100 Tensor Core GPUs – the go-to accelerators for AI workloads, with the company supplying as much as 90 percent of GPUs – continues to outpace supply.

Intel and AMD have GPUs, hyperscale cloud providers like Google, Microsoft, and Amazon Web Services (AWS) are making their own chips for AI, and there seems to be a growing lineup of startups that are looking for a foothold in the market. However, what some of these companies lack are the other factors – such as systems, software, and AI ecosystem – that Nvidia also brings to the table, which will make it difficult to knock the company off the top of the hill.

HPE’s MacDonald was asked during the press briefing why HPE is going all-in with Nvidia, given the partnership it also has with AMD for AI accelerators.

“Success in enterprise generative AI relies not just on accelerator silicon, but also on fabrics, on system design, on models, on software tooling, on the optimizations of those models at runtime,” he said. “We are thrilled to be working closely with Nvidia with a very strong set of capabilities that together enable us to have our enterprise customers be able to move forward much more quickly on their enterprise journeys. It’s key to notice that this HPE Private Cloud AI is not a reference architecture that would place the burden on the customer to assemble their AI infrastructure by cobbling together piece parts, whether those are GPUs or pieces of software or different connectivity. HPE and Nvidia have done the hard work for customers by co-developing a turnkey AI private cloud that is up and running in three clicks. That goes much beyond a question simply of an accelerator.”
